HTTPS behind your reverse proxy

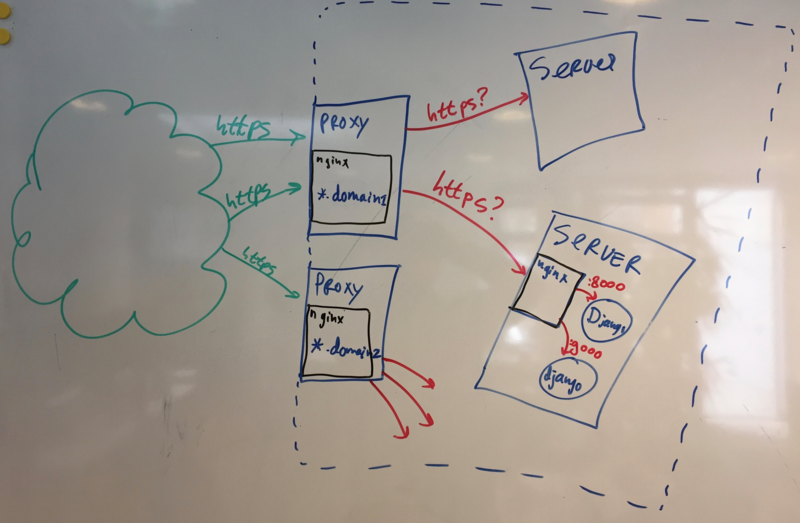

We have a setup that looks (simplified) like this:

HTTP/HTTPS connections from browsers (“the green cloud”) go to two reverse proxy servers on the outer border of our network. Almost everything is https.

Nginx on those proxies then forwards the requests to the actual webservers. Those webservers also have nginx on them, which proxies the request to the actual django site running on some port (8000, 5010, etc.).

Until recently, the https connection was only between the browser and the main proxies. Internally, inside our own network, traffic was http-only. In a sense that is OK, as you’ve got security and a firewall and so on. But… actually it is not OK. At least, not OK enough.

You cannot rely only on a solid outer wall. You need defense in depth: network segmentation, restricted access. For instance, the traffic between the main proxies (in the outer “wall”) and the webservers inside it should ideally also be encrypted. Now, how to do this?

It turned out to be pretty easy, but figuring it out took some time. So did finding the right terminology to google for :-)

The main proxies (nginx) terminate the https connection. Most of the ssl certificates that we use are wildcard certificates. For example:

    server {
        listen 443;
        server_name sitename.example.org;
        location / {
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $http_host;
            proxy_set_header X-Forwarded-Proto https;
            proxy_redirect off;
            proxy_pass http://internal-server-name;
            proxy_http_version 1.1;
        }
        ssl on;
        ....
        ssl_certificate /etc/ssl/certs/wildcard.example.org.pem;
        ssl_certificate_key /etc/ssl/private/wildcard.example.org.key;
    }

Using https instead of http towards the internal webserver is easy. Just use https instead of http :-) Change the proxy_pass line:

    proxy_pass https://internal-server-name;

The google term here is re-encrypting, btw.
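The main proxy config above also passes the client address along via `$proxy_add_x_forwarded_for`. As a minimal Python sketch (the helper name and the IP addresses are hypothetical), this models what nginx documents for that variable: the incoming X-Forwarded-For header with the connecting client’s `$remote_addr` appended, or just `$remote_addr` when the client sent no such header.

```python
from typing import Optional


def proxy_add_x_forwarded_for(incoming: Optional[str], remote_addr: str) -> str:
    """Model of nginx's $proxy_add_x_forwarded_for variable (hypothetical helper).

    nginx appends $remote_addr to an existing X-Forwarded-For header,
    separated by ", "; without such a header the result is just $remote_addr.
    """
    if incoming:
        return f"{incoming}, {remote_addr}"
    return remote_addr


# Browser -> main proxy: no X-Forwarded-For yet, so the browser's IP is sent on.
hop1 = proxy_add_x_forwarded_for(None, "203.0.113.7")
# Main proxy -> internal nginx: assuming the internal nginx uses the same
# directive, it appends the main proxy's (hypothetical) internal address.
hop2 = proxy_add_x_forwarded_for(hop1, "10.0.0.2")
print(hop1)
print(hop2)
```

The django site at the end of the chain thus sees the original browser address first in the list.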

The internal webserver has to allow an https connection. This is where we initially made it too hard for ourselves. We copied the relevant wildcard certificate to the webserver and changed the site to use the certificate and to listen on 443, basically just like on the main proxy.

A big drawback is that you need to copy the certificate all over the place. Not very secure. Not a good idea. And we generate/deploy the nginx config for the webserver from within our django project, so every django project would need to know the filesystem location and name of those certificates… Bah.

“What about not being so strict on the proxy? Can’t we tell nginx to skip the strict check on the certificate?” After a while I found the `proxy_ssl_verify` nginx setting. Bingo. Only, you need nginx 1.7.0 for it, and the main proxies are still on ubuntu 14.04, which has an older nginx. But wait: the default is “off”. Which means that nginx doesn’t bother checking certificates when proxying! A bit of experimenting showed that nginx really didn’t mind which certificate was used on the webserver! Nice.
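To make that “off” default concrete: it behaves like a TLS client that encrypts the connection but accepts whatever certificate the backend presents. A minimal Python sketch of that behaviour with the standard `ssl` module; this is an analogy, not nginx’s own code, and the function names are hypothetical.

```python
import socket
import ssl
from typing import Optional


def backend_context_without_verification() -> ssl.SSLContext:
    """TLS client context that encrypts but accepts any server certificate.

    Analogous to nginx's default `proxy_ssl_verify off`: the traffic is
    encrypted, but a self-signed (e.g. snakeoil) certificate is not rejected.
    """
    ctx = ssl.create_default_context()
    # check_hostname has to be switched off before verify_mode can be CERT_NONE.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def fetch_backend_certificate(host: str, port: int = 443) -> Optional[bytes]:
    """Open an encrypted connection to the backend and return its certificate (DER)."""
    ctx = backend_context_without_verification()
    with socket.create_connection((host, port), timeout=5) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert(binary_form=True)
```

For example, `fetch_backend_certificate("internal-server-name")` (the placeholder host from the config above) would succeed against a backend with a self-signed certificate, whereas the same connection made with a plain `ssl.create_default_context()` fails certificate verification.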

So any certificate is fine, really. I did my experimenting with ubuntu’s default “snakeoil” self-signed certificate (`/etc/ssl/certs/ssl-cert-snakeoil.pem`). Install the `ssl-cert` package if it isn’t there.

On the webserver, the config thus looks like this:

    server {
        listen 443;
        # ^^^ Yes, we're running on https internally, too.
        server_name sitename.example.org;
        ssl on;
        ssl_certificate /etc/ssl/certs/ssl-cert-snakeoil.pem;
        ssl_certificate_key /etc/ssl/private/ssl-cert-snakeoil.key;
        ...
    }

An advantage: the django site’s setup doesn’t need to know about specific certificate names; it can just use the basic certificate that’s always there on ubuntu.
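A hypothetical sketch of that advantage, in Python since the config is generated from within the django project. The function name, the template and the `proxy_pass` to a local port are illustrative assumptions, not our actual deployment code; the point is that the certificate paths are ubuntu’s fixed snakeoil locations, so only the site name and port vary per site. The sketch uses `listen 443 ssl;` instead of `listen 443;` plus `ssl on;`, as newer nginx versions deprecate the `ssl on;` directive.

```python
from string import Template

# Ubuntu's snakeoil paths are identical on every server (the contents differ).
SNAKEOIL_CERT = "/etc/ssl/certs/ssl-cert-snakeoil.pem"
SNAKEOIL_KEY = "/etc/ssl/private/ssl-cert-snakeoil.key"

# Hypothetical template for the internal webserver's nginx site.
NGINX_TEMPLATE = Template("""\
server {
    listen 443 ssl;
    server_name $server_name;
    ssl_certificate $cert;
    ssl_certificate_key $key;
    location / {
        # Assumed: the django site listens on a local port (8000, 5010, ...).
        proxy_pass http://127.0.0.1:$port;
    }
}
""")


def render_internal_nginx_config(server_name: str, port: int) -> str:
    """Render the internal nginx site config; no site-specific certificate needed."""
    return NGINX_TEMPLATE.substitute(
        server_name=server_name, cert=SNAKEOIL_CERT, key=SNAKEOIL_KEY, port=port
    )


print(render_internal_nginx_config("sitename.example.org", 8000))
```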

Now what about that “snakeoil” certificate? Isn’t it some dummy certificate that is the same on every ubuntu install? If it is always the same certificate, you can still sniff and decrypt the internal https traffic almost as easily as plain http traffic…

No, it isn’t. I verified it by uninstalling/purging the `ssl-cert` package and then re-installing it: the certificate changes. The snakeoil certificate is generated fresh when installing the package, so every server has its own self-signed certificate.

You can generate a fresh certificate easily, for instance when you copied a server from an existing virtual machine template:

    $ sudo make-ssl-cert generate-default-snakeoil --force-overwrite
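To check that servers cloned from a template really ended up with different certificates, you can compare fingerprints. A minimal Python sketch using only the standard library (the helper names are hypothetical); `cert_fingerprint` produces the same colon-separated SHA-256 hex digits that `openssl x509 -noout -fingerprint -sha256` prints.

```python
import hashlib
import ssl


def cert_fingerprint(pem: str) -> str:
    """SHA-256 fingerprint of a PEM certificate, as colon-separated uppercase hex."""
    der = ssl.PEM_cert_to_DER_cert(pem)  # strips the PEM armour and base64-decodes
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def local_snakeoil_fingerprint(path: str = "/etc/ssl/certs/ssl-cert-snakeoil.pem") -> str:
    """Fingerprint of this server's own snakeoil certificate."""
    with open(path) as f:
        return cert_fingerprint(f.read())


def remote_fingerprint(host: str, port: int = 443) -> str:
    """Fingerprint of the certificate a webserver presents (not verified)."""
    return cert_fingerprint(ssl.get_server_certificate((host, port)))
```

Running `local_snakeoil_fingerprint()` on each webserver (or `remote_fingerprint(...)` against each of them) should give a different value per server; identical values mean the certificate was cloned and should be regenerated with the command above.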

As long as the only goal is to encrypt the traffic between the main proxy and an internal webserver, the certificate is of course fine.

Summary: nginx doesn’t check the certificate when proxying. So terminating the ssl connection on a main nginx proxy and then re-encrypting it (https) to backend webservers which use the default snakeoil certificate is a simple, workable solution. And a solution that is a big improvement over plain http traffic!